Google Cast Remote Display Plugin for Unity

Posted by Leon Nicholls, Developer Programs Engineer

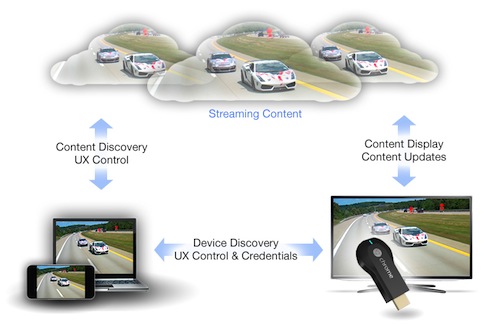

Today we launched the Google Cast Remote Display plugin for Unity to make it easy to take your Unity games to TVs. The Google Cast Remote Display APIs use the powerful GPUs, CPUs and sensors of your Android or iOS mobile device to render a local display on your mobile device and a remote display on your TV.

Unity is a very popular cross-platform game development platform that supports mobile devices. By combining the Google Cast Remote Display technology with the amazing rendering engine of Unity, you can now develop high-end gaming experiences that users can play on an inexpensive Chromecast and other Google Cast devices.

Games Using the Plugin

We have exciting gaming apps from our partners that already use the Remote Display plugin for Unity, with many more coming shortly.

Monopoly Here & Now is the latest twist on the classic Monopoly game. Travel the world visiting some of the world’s most iconic cities and landmarks and race to be the first player to fill your passport with stamps to win! It's a fun new way to play for the whole family.

Risk brings the original game of strategic conquest to the big screen. Challenge your friends, build your army, and conquer the world! The game includes the classic world map as well as two additional themed maps.

These games show that you can use the power of a phone or tablet to create beautiful games and send that world to the TV.

Add the Remote Display Plugin to Your Game

You can download the Remote Display plugin for Unity from either GitHub or the Unity Asset Store. If you have an existing Unity game, simply import the Remote Display package and add the CastRemoteDisplayManager prefab to your scene. Next, set up cameras for the local and remote displays and configure them with the CastRemoteDisplayManager.

To display a Cast button in the UI so the user can select a Google Cast device, add the CastDefaultUI prefab to your scene.

Now you are ready to build and run the app. Once you connect to a Cast device, you will see the remote camera's view on the TV.

Consider how you will adapt your game's interactions for a multi-screen user experience. You can use the mobile device's sensors to create abstract controls, where motion or touch on the device drives actions on the TV screen. Or you can create virtual controls, where the player touches something on the device to control something else on the screen.
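Abstract motion controls usually need some filtering so that small, unintended hand movements don't move things on the TV. The sketch below is a hypothetical example, not part of the Remote Display plugin: it maps a sideways accelerometer reading (in units of g) to a steering value between -1 and 1, ignoring tilts inside a dead zone and saturating at a maximum tilt. The function name and the `dead_zone` and `max_tilt` values are illustrative assumptions; in a Unity game you would write equivalent logic in a C# script, reading the device's accelerometer (for example through Unity's `Input.acceleration`).

```python
def tilt_to_steering(accel_x: float, dead_zone: float = 0.1, max_tilt: float = 0.5) -> float:
    """Map a sideways accelerometer reading (in g) to a steering value in [-1.0, 1.0].

    Hypothetical helper: dead_zone and max_tilt are illustrative tuning values,
    not settings from the Remote Display plugin.
    """
    if not 0.0 <= dead_zone < max_tilt:
        raise ValueError("expected 0 <= dead_zone < max_tilt")
    magnitude = abs(accel_x)
    if magnitude < dead_zone:
        # Small, unintended movements produce no input on the TV.
        return 0.0
    # Rescale so the output grows from 0 at the dead-zone edge to 1 at max_tilt,
    # then clamp so stronger tilts saturate at full steering.
    scaled = min((magnitude - dead_zone) / (max_tilt - dead_zone), 1.0)
    return scaled if accel_x > 0 else -scaled


if __name__ == "__main__":
    for reading in (0.05, 0.3, -0.3, 0.9):
        print(f"{reading:+.2f} g -> steering {tilt_to_steering(reading):+.2f}")
```

With the default values, a 0.3 g tilt lands halfway between the dead zone and the maximum, giving a steering value of about 0.5, while anything beyond 0.5 g is treated as full steering. Tuning these two numbers is a design choice: a larger dead zone makes the game more forgiving for a player who is glancing between the phone and the TV.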

For visual design, it is important not to fatigue the player by making them constantly look up and down. The Google Cast UX team has developed the Google Cast Games UX Guidelines to explain how to make the user experience of cast-enabled games consistent and predictable.

Developer Resources

Learn more about Google's official Unity plugins. For a more detailed description of how to use the Remote Display plugin for Unity, read our developer documentation and try our codelab. We have also published a UX-compliant sample Unity game on the Unity Asset Store and GitHub. Join our G+ community to share your Google Cast developer experience.

With over 20 million Chromecast devices sold, the opportunity for developers is huge, and it’s simple to add Remote Display to an existing Unity game. Now you can take your game to the biggest screen in millions of users’ homes.
